DOI or URL of the report: https://osf.io/u7ghb?view_only=60cb55c3c3c74f76a8e170fb498e2789

Version of the report: v2

Please find (i) my response to the recommender and reviewer comments and (ii) the manuscript file with all changes tracked separately attached below. Thank you!

All files (including a clean version of the manuscript with all changes confirmed) are available as one document via https://osf.io/u7ghb?view_only=60cb55c3c3c74f76a8e170fb498e2789 (version 3).

, posted 16 Dec 2022, validated 16 Dec 2022

Thanks for the careful attention to the previous comments. Both reviewers returned to evaluate your revised manuscript, and I'm happy to say that both are broadly satisfied. You will see some remaining clarifications to address in the review from Sauer, after which I expect we can issue Stage 1 IPA.

I look forward to receiving your revised manuscript. Please note that due to the December shutdown at PCI RR, you won't be able to submit your revised manuscript until we reopen on January 3rd. I will action your manuscript as quickly as possible at that time.

The responses to the previous reviews are thoughtful, open, and conscientious, and have largely addressed my concerns. I have only a couple of relatively minor comments on the revised submission.

1. RQ2 and H2

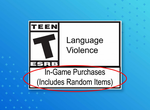

H2 currently reads "Video games previously known to be high-grossing and contain loot boxes and presently containing loot boxes on the Google Play Store will accurately display the IARC ‘In-Game Purchases (Includes Random Items)’ label".

I think there are a couple of issues here. First, the clause "previously known to be high grossing" feels vague (e.g., it feels like you'd need to operationalise "high grossing" and the manner through which this was "previously known"). Second, the hypothesis is a bit wordy and a little difficult to follow. Perhaps the hypothesis could be simplified? Maybe something like: "All titles in this sample of video games previously known to contain loot boxes, and available on the Google Play Store, will accurately display the IARC ‘In-Game Purchases (Includes Random Items)’ label"?

2. Representativeness

Given the revisions to RQ2 and H2, the sample can now provide data capable of testing the hypothesis, so that issue has been resolved. The revised ms also explicitly considers the issues relating to the representativeness of the sample used, and I appreciate the openness here. The question, however, is whether compliance data from this sample of very popular and highly scrutinised games will tell us something useful about compliance behaviour more generally. On the one hand, compliance may well be higher for these games than for other, less popular and less scrutinised games, and therefore the current investigation may produce an overestimate of compliance rates. On the other hand, these are the popular games; the games interacted with most by gamers. I've wavered back and forth on this issue while writing this review. Ultimately, I think the proposed methodology/sample is suitable. Although it may overestimate compliance for the market as a whole, it addresses the titles most gamers play (and therefore provides data of broad enough relevance to be practically useful), and I'm not unsympathetic to the arguments relating to expediency and efficiency (and the impracticality of assessing "all games").

3. 95% cut-off

I understand that any cut-off of this sort is going to be largely arbitrary and, as long as this is acknowledged, all is well.

4. Minecraft and Roblox

Interesting issue, eh? I think the proposed treatment of these titles is a sensible approach.

https://doi.org/10.24072/pci.rr.100317.rev21

Thanks to the researcher for addressing my comments in the previous round of review. These have been addressed to a satisfactory standard, and I have no further suggestions to add. I note that there was some disagreement between myself and the second reviewer regarding how Minecraft and Roblox should be treated in Study 2; I think that the proposed solution has merit, and does a good job of balancing the need to treat these games as unusual entities with regard to loot box systems while also considering them in the context of future avenues for research.

https://doi.org/10.24072/pci.rr.100317.rev22

DOI or URL of the report: https://osf.io/u7ghb?view_only=60cb55c3c3c74f76a8e170fb498e2789

Version of the report: v1

Please find (i) my response to the recommender and reviewer comments and (ii) the manuscript file with all changes tracked separately attached below. Thank you!

All files (including a clean version of the manuscript with all changes confirmed) are available as one document via https://osf.io/u7ghb?view_only=60cb55c3c3c74f76a8e170fb498e2789 (version 2).

, posted 18 Nov 2022, validated 18 Nov 2022

I have now obtained two very helpful reviews of your submission. As you will see, both evaluations are cautiously positive while also noting various aspects of the design and rationale that would benefit from clarification or modification. Key issues to address include the testability of the hypotheses, the timeframe of the data extraction (with the useful suggestion by Etchells to use a broader and more principled range), and the justification of specific assumptions and elements of the rationale.

One particular issue that requires careful attention is whether the answer to RQ2 is a foregone conclusion given known information (noted by Sauer). In order to be eligible for consideration as a Registered Report at PCI RR, the conclusions of the research must not be known (or inferrable with certainty) before the study is conducted. Please consider this issue carefully and, in turn, whether the bias control level for your submission is set appropriately.

Based on these reviews, I am happy to invite a revision, which I will return to the reviewers for another look.

This is a well-considered and straightforward study, and I have no major concerns in general.

Study 1 carries with it a certain level of risk, given that the relevant games lists from both PEGI and ESRB have already been collated, but I appreciate the necessity of this, and the author is honest in their reporting. However, I do wonder whether the time frame limitation is somewhat arbitrary. While I appreciate that using the entire database (c. 31,000 games) is beyond the scope of the study, the decision to limit the sample to the year leading up to September 2022 could be better justified. I would therefore suggest expanding the time frame to capture a broader range of games, grounded in a sounder rationale. Given that the announcement to include the relevant warning tags came in April 2020, I wonder whether this would serve as a useful starting point. Expanding the time frame would also allay concerns that readers may have about the potential for 'data peeking'.

Study 2 seems well thought through, with a clear processing pipeline for gathering game data. I appreciated the level of detail regarding the sampling method, but felt that this became a bit cumbersome with regard to Roblox and Minecraft. The author does an admirable job of providing a clear justification for scenarios where these two games (or a combination thereof) will or will not be included in the final sample, but I wonder whether it simply makes sense to include them both anyway. Given the unique nature of these two games in terms of compliance issues, they serve well as test cases for future sandbox games that may run up against third-party content, and would therefore be well placed to be included in such considerations.

https://doi.org/10.24072/pci.rr.100317.rev11