Exploring cross-cultural variation in speech and song

Similarities and differences in a global sample of song and speech recordings

Abstract

Recommendation: posted 17 January 2023, validated 17 January 2023

Chambers, C. (2023) Exploring cross-cultural variation in speech and song. Peer Community in Registered Reports, . https://rr.peercommunityin.org/PCIRegisteredReports/articles/rec?id=316

Related stage 2 preprints:

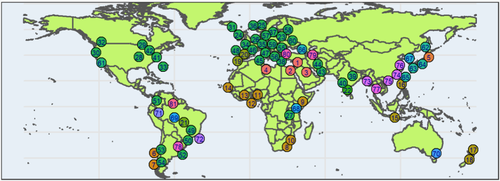

Yuto Ozaki, Adam Tierney, Peter Pfordresher, John Mcbride, Emmanouil Benetos, Polina Proutskova, Gakuto Chiba, Fang Liu, Nori Jacoby, Suzanne Purdy, Patricia Opondo, Tecumseh Fitch, Shantala Hegde, Martín Rocamora, Rob Thorne, Florence Ewomazino Nweke, Dhwani Sadaphal, Parimal Sadaphal, Shafagh Hadavi, Shinya Fujii, Sangbuem Choo, Marin Naruse, Utae Ehara, Latyr Sy, Mark Lenini Parselelo, Manuel Anglada-Tort, Niels Chr. Hansen, Felix Haiduk, Ulvhild Færøvik, Violeta Magalhães, Wojciech Krzyżanowski, Olena Shcherbakova, Diana Hereld, Brenda Suyanne Barbosa, Marco Antonio Correa Varella, Mark van Tongeren, Polina Dessiatnitchenko, Su Zar Zar, Iyadh El Kahla, Olcay Muslu, Jakelin Troy, Teona Lomsadze, Dilyana Kurdova, Cristiano Tsope, Daniel Fredriksson, Aleksandar Arabadjiev, Jehoshaphat Philip Sarbah, Adwoa Arhine, Tadhg Ó Meachair, Javier Silva-Zurita, Ignacio Soto-Silva, Neddiel Elcie Muñoz Millalonco, Rytis Ambrazevičius, Psyche Loui, Andrea Ravignani, Yannick Jadoul, Pauline Larrouy-Maestri, Camila Bruder, Tutushamum Puri Teyxokawa, Urise Kuikuro, Rogerdison Natsitsabui, Nerea Bello Sagarzazu, Limor Raviv, Minyu Zeng, Shahaboddin Dabaghi Varnosfaderani, Juan Sebastián Gómez-Cañón, Kayla Kolff, Christina der Nederlanden, Meyha Chhatwal, Ryan Mark David, I Putu Gede Setiawan, Great Lekakul, Vanessa Nina Borsan, Nozuko Nguqu, Patrick E. Savage

https://doi.org/10.31234/osf.io/jr9x7

Recommendation

The recommender in charge of the evaluation of the article and the reviewers declared that they have no conflict of interest (as defined in the code of conduct of PCI) with the authors or with the content of the article.

Reviewed by Nai Ding, 04 Dec 2022

Including the new dataset into the analysis will address my previous concerns. I look forward to seeing the final results.

https://doi.org/10.24072/pci.rr.100316.rev21

Evaluation round #1

DOI or URL of the report: https://osf.io/ta2df?view_only=39565b55d1094555a5bb94235cd8e65c

Version of the report: v1

Author's Reply, 30 Nov 2022

Decision by Chris Chambers, posted 17 Nov 2022, validated 17 Nov 2022

I have now obtained two very helpful expert evaluations of your submission. As you will see, the reviews are broadly positive while also raising a number of issues to consider in revision, including clarification of procedural and methodological details, and consideration of additional (or alternative) analyses.

There is one issue raised in the reviews that I anticipated based on my own reading, which is the validity of sampling exclusively from the team of co-authors. One of the reviewers writes: "...it's of course not ideal to only sample data from co-authors, since co-authors may differ from the randomly sampled participants in many different ways even if they are not aware of the hypotheses being tested. Why not just ask the co-authors to acquire some speech and songs in their native language and do a control analysis on those audios? This approach seems particularly plausible since the current manuscript mainly analyzed songs and spoken descriptions, which are not matched in the content." I think this suggestion is worthy of careful consideration. Concerning the appropriateness of including audio contributors who are not aware of the hypotheses of the study as co-authors, given the unusual nature of this work, I am happy that they continue as outlined provided they are fully informed at Stage 2 after results are known, and that they have the opportunity to contribute to the interpretation of the results in light of the predictions.

Overall, based on the reviews and my own reading, I believe your submission is a strong contender for eventual Stage 1 acceptance, and I would like to invite you to submit a revision and point-by-point response to the reviewers. I will likely send your revised manuscript back to at least one of the reviewers for another look.

Reviewed by Bob Slevc, 14 Nov 2022

This RR describes a plan, along with some pilot data, to collect samples of song and speech (and also of recited lyrics and instrumental music, for exploratory purposes) from a wide variety of language communities, and then analyze these on various dimensions to assess similarities and differences between speech and song.

I really like this overall idea and I think this is a great use of a registered report format. I was initially slightly disappointed that only a few potential dimensions of similarity/differences are included as confirmatory hypotheses, but I think the most valuable part of this project may really be the creation of a rich dataset for use in exploratory work. That is, while I appreciate the (obviously significant) work involved in developing and proposing the confirmatory hypothesis tests, I also want to highlight the usefulness of this undertaking for hypothesis generation. Here, of course, I will offer a few suggestions and comments that might be worth considering before undertaking this large project. In no particular order:

1. I'd like to know more about how songs were chosen. I'm pretty happy with the conditions -- singing/recitation/description/instrumental seem likely to give good samples across the speech-song spectrum -- but I do wonder how much the data from a given language will depend on the specific song that was chosen. I think the prompt in the recording protocol is good, but... do we know, for example, if songs that are "one of the oldest/ most “traditional” (loosely defined) / most familiar to your cultural background" are also representative? (Like, I might worry that the oldest ones are actually a bit unusual such that they stand out in some way.) Relatedly, it might be good to constrain (or at least assess) the type of song. I could imagine systematic differences between, say, lullabies and celebratory music and maybe some of those differences might also show up in their similarity to speech. I don't have reason to think this is likely to be a systematic problem, but I feel like some discussion and/or some plan to assess the "representativeness" of the songs that were chosen might be important.

2. I was also wondering how these 23 language families were chosen. The answer seems to be mostly opportunistically, which I think is fine given that there's a plan to assess bias in the languages sampled. I wonder if another way to deal with this issue (rather than just sampling a subset of samples that are more balanced) might be a random-effects meta-analysis model, where languages can be nested into language families. I am no expert on meta-analysis, but I believe this is one way that meta-analyses deal with non-independent effects without having to exclude large swaths of data.

3. It took me a little while to understand how "sameness" would be tested here. On my first read, I was pretty confused (I think in part this is because the manuscript says, when describing the SESOI, "The basic idea is to analyze whether the feature differs between song and speech," which... sounds like a difference test not a 'sameness' test.) The logic is explained much more clearly a bit later, at the bottom of p. 10, but I'd suggest making the logic more clear upfront.

More importantly, I'd like to see a bit more justification about why the SESOI is what it is here. I recognize that d=.4 is a "normal" effect size in behavioral research, but this isn't really a standard behavioral study and so... is that effect size a normal/small one for acoustic differences? This isn't just a point of curiosity because the extent to which lack of differences for Hypotheses 4-6 can be taken to support cross-cultural regularities really depends on what counts as similar within those domains. I don't know the best way to choose or justify a SESOI here, but perhaps one simple approach might be to give the reader an intuitive sense by offering examples of the largest differences (in timbral brightness, pitch interval size, and pitch declination) that would be considered the same under this SESOI (and, conversely, examples of the smallest differences that would be considered *not* the same). Maybe this SESOI could also be justified based on the variability within languages, on the idea that the level of variability that exists within songs/sentences in a language isn't likely to be meaningfully different between languages or something like that. (Obviously that would be hugely impractical to do for all these languages, but maybe just using one or two as a way to estimate between-song/speech variability?) I suppose yet another alternative might be to rely on Bayes Factors, but I'm not sure that actually solves the issue because it would still require justifying the choice of priors which might be even less straightforward than justifying a SESOI.

4. I was also a little confused about the segmentation. The introduction notes that each recording will be manually annotated by the coauthor who recorded it, but later on the methods section notes that "Those annotations will be created by the first author (Ozaki) because the time required to train and ask each collaborator to create these annotations would not allow us to recruit enough collaborators for a well-powered analysis." (I think maybe this is referring to the pilot data vs. the proposed data, but I am not totally sure.) Along these lines, I appreciated the assessment of interrater reliability for the pilot data, but I wonder if it might be important to get some measure of reliability from the to-be-analyzed data as well. (Like, the analysis of pilot data helps assess confounds in how people segment their own recordings, but if one author does all recordings, might there be some differences in languages that author knows well vs. does not know or something like that?) I'm sure it would be impractical to have multiple raters for all items, but perhaps some subset (or excerpts of some subset, as in the pilot reliability analyses) could be segmented by two people to assess reliability?

5. The power analysis seems reasonable enough to me, except doesn't the power here depend on the specific languages the recordings come from? (like, shouldn't it be about the necessary variability of language families or whatever rather than just overall number of recordings?)
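To make the "sameness" logic of point 3 concrete, here is a minimal sketch of a two one-sided tests (TOST) equivalence check against a SESOI of d = 0.4. It is an illustration under stated assumptions, not the authors' analysis pipeline: the function name `equivalence_test` is hypothetical, the SESOI is applied to the standardized mean of paired song-minus-speech differences, and a large-sample normal approximation stands in for whatever test statistic the manuscript actually uses.

```python
import math
import statistics


def equivalence_test(song, speech, sesoi_d=0.4, alpha=0.05):
    """Paired TOST with a normal approximation (illustrative only).

    song, speech: per-participant feature values, paired by index.
    Returns (standardized mean difference, TOST p-value, equivalent?).
    """
    diffs = [a - b for a, b in zip(song, speech)]
    n = len(diffs)
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    se = sd / math.sqrt(n)

    # Assumption: the SESOI is expressed in units of the SD of the differences.
    bound = sesoi_d * sd

    norm = statistics.NormalDist()
    # Test 1: reject "mean <= -bound" when the mean sits well above -bound.
    p_lower = 1.0 - norm.cdf((mean + bound) / se)
    # Test 2: reject "mean >= +bound" when the mean sits well below +bound.
    p_upper = norm.cdf((mean - bound) / se)

    # Equivalence is claimed only if BOTH one-sided tests reject.
    p_tost = max(p_lower, p_upper)
    return mean / sd, p_tost, p_tost < alpha


# Hypothetical usage with made-up feature values for four participants.
d_z, p_value, same = equivalence_test(
    song=[2.1, 1.9, 2.0, 2.2], speech=[2.0, 2.0, 2.1, 2.1]
)
```

The point of the sketch is that "sameness" is supported only when the observed difference is reliably inside the interval from -SESOI to +SESOI, so the conclusion depends directly on how that bound is justified.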

These points aside, I think this is a really interesting proposal that is a great fit for a registered report and I look forward to seeing how it develops!

https://doi.org/10.24072/pci.rr.100316.rev11

Reviewed by anonymous reviewer 1, 11 Nov 2022

1A. The scientific validity of the research question(s)

-- The question addressed here is how speech and songs differ in their acoustic properties. The question is certainly interesting.

1B. The logic, rationale, and plausibility of the proposed hypotheses (where a submission proposes hypotheses)

-- The hypotheses are driven by the pilot analysis and are valid.

1C. The soundness and feasibility of the methodology and analysis pipeline (including statistical power analysis or alternative sampling plans where applicable)

-- The methods are largely valid. However, it's of course not ideal to only sample data from co-authors, since co-authors may differ from the randomly sampled participants in many different ways even if they are not aware of the hypotheses being tested. Why not just ask the co-authors to acquire some speech and songs in their native language and do a control analysis on those audios? This approach seems particularly plausible since the current manuscript mainly analyzed songs and spoken descriptions, which are not matched in the content.

A related issue: I'm not sure if it's valid to include audio contributors who are not even aware of the hypotheses of the study as co-authors.

1D. Whether the clarity and degree of methodological detail is sufficient to closely replicate the proposed study procedures and analysis pipeline and to prevent undisclosed flexibility in the procedures and analyses

-- Yes but the statistics should be improved in the final version.

1E. Whether the authors have considered sufficient outcome-neutral conditions (e.g. absence of floor or ceiling effects; positive controls; other quality checks) for ensuring that the obtained results are able to test the stated hypotheses or answer the stated research question(s).

-- I don't think there are such issues.

https://doi.org/10.24072/pci.rr.100316.rev12