Announcements

Please note: To accommodate reviewer and recommender holiday schedules, we will be closed to submissions from 1st July to 1st September. During this time, reviewers will be able to submit reviews and recommenders will issue decisions, but no new or revised submissions can be made by authors. The one exception to this rule is that authors using the scheduled track who submit their initial Stage 1 snapshot prior to 1st July can choose a date within the shutdown period to submit their full Stage 1 manuscript.

We are recruiting recommenders (editors) from all research fields!

Your feedback matters! If you have authored or reviewed a Registered Report at Peer Community in Registered Reports, then please take 5 minutes to leave anonymous feedback about your experience, and view community ratings.

Latest recommendations

| Id | Title * | Authors * | Abstract * | Picture | Thematic fields * | Recommender | Reviewers | Submission date | |

|---|---|---|---|---|---|---|---|---|---|

22 Jul 2024

STAGE 1

Replication of “Carbon-Dot-Based Dual-Emission Nanohybrid Produces a Ratiometric Fluorescent Sensor for In Vivo Imaging of Cellular Copper Ions”

Maha Said, Mustafa Gharib, Samia Zrig, Raphaël Lévy

https://osf.io/kf9qe/

Replicating, Revising and Reforming: Unpicking the Apparent Nanoparticle Endosomal Escape Paradox

Recommended by Emily Linnane and Yuki Yamada based on reviews by Cecilia Menard-Moyon and Zeljka Krpetic

Context

Over the past decade there has been an exponential increase in the number of research papers utilising nanoparticles for biological applications such as intracellular sensing [1, 2], theranostics [3-5] and more recently drug delivery and precision medicine [6, 7]. Despite the success stories, there is a disconnect regarding current dogma on issues such as nanoparticle uptake and trafficking, nanoparticle delivery via the enhanced permeability and retention (EPR) effect, and endosomal escape. Critical re-evaluation of these concepts both conceptually and experimentally is needed for continued advancement in the field.

For this preregistration, Said et al. (2024) focus on nanoparticle intracellular trafficking, specifically endosomal escape [8]. The current consensus in the literature is that nanoparticles enter cells via endocytosis [9, 10] but reportedly just 1-2% of nanoparticles or nanoparticle probes escape endosomes and enter the cytoplasm [11-13]. There is therefore an apparent paradox over how sensing nanoparticles can detect their intended targets in the cytoplasm if they are trapped within the cell endosomes. To address this fundamental issue of nanoparticle endosomal escape, Lévy and coworkers are carrying out replication studies to thoroughly and transparently replicate the most influential papers in the field of nanoparticle sensing. The aim of these replication studies is twofold: to establish a robust methodology to study endosomal escape of nanoparticles, and to encourage discussions, transparency and a step-change in the field.

Replication of “Carbon-Dot-Based Dual-Emission Nanohybrid Produces a Ratiometric Fluorescent Sensor for In Vivo Imaging of Cellular Copper Ions”

For this replication study, the authors classified papers on the topic of nanoparticle sensing and subsequently ranked them by number of citations. Based on this evaluation they selected a paper by Zhu and colleagues [14] entitled “Carbon-Dot-Based Dual-Emission Nanohybrid Produces a Ratiometric Fluorescent Sensor for In Vivo Imaging of Cellular Copper Ions” for their seminal replication study. To determine the reproducibility of the results from Zhu et al., the authors aim to establish the proportion of endosomal escape of the nanoparticles, and to examine the data in a biological context relevant to the application of the probe.

Beyond Replication

The authors plan to replicate the exact conditions reported in the materials and methods section of the selected paper, such as nanoparticle probe synthesis of CdSe@C-TPEA nanoparticles, assessment of particle size, stability and reactivity and effect on cells (TEM, pH experiments, fluorescent responsivity to metal ions and cell viability). In addition, Said et al. plan to include further experimental characterisation to complement the existing study by Zhu and colleagues. They will incorporate additional controls and methodology to determine the intracellular location of nanoparticle probes in cells including: quantifying excess fluorescence in the culture medium, live cell imaging analysis, immunofluorescence with endosomal and lysosomal markers, and electron microscopy of cell sections. The authors will also include supplementary viability studies to assess the impact of the nanoparticles on HeLa cells as well as an additional biologically relevant cell line (for use in conjunction with the HeLa cells as per the original paper).

The Stage 1 manuscript underwent two rounds of thorough in-depth review. After considering the detailed responses to the reviewers' comments, the recommenders determined that the manuscript met the Stage 1 criteria and awarded in-principle acceptance (IPA).

The authors have thoughtfully considered their experimental approach to the replication study, whilst acknowledging any potential limitations. Given that conducting such a replication study is novel in the field of Nanotechnology and there is currently no ‘gold standard’ approach in doing so, the authors have shown thoughtful regard for statistical analysis and unbiased methodology where possible.

Based on current information, this study is the first use of preregistration via Peer Community in Registered Reports and the first formalised replication study in Nanotechnology for Biosciences. The outcomes of this study will be significant both scientifically and in the wider context of discussion of the scientific method.

URL to the preregistered Stage 1 protocol: https://osf.io/qbxpf

Level of bias control achieved: Level 6. No part of the data or evidence that will be used to answer the research question yet exists and no part will be generated until after IPA.

List of eligible PCI RR-friendly journals:

References

1. Howes, P. D., Chandrawati, R., & Stevens, M. M. (2014). Colloidal nanoparticles as advanced biological sensors. Science, 346(6205), 1247390. https://doi.org/10.1126/science.1247390

2. Liu, C. G., Han, Y. H., Kankala, R. K., Wang, S. B., & Chen, A. Z. (2020). Subcellular performance of nanoparticles in cancer therapy. International Journal of Nanomedicine, 675-704. https://doi.org/10.2147/IJN.S226186

3. Tang, W., Fan, W., Lau, J., Deng, L., Shen, Z., & Chen, X. (2019). Emerging blood–brain-barrier-crossing nanotechnology for brain cancer theranostics. Chemical Society Reviews, 48(11), 2967-3014. https://doi.org/10.1039/C8CS00805A

4. Yoon, Y. I., Pang, X., Jung, S., Zhang, G., Kong, M., Liu, G., & Chen, X. (2018). Smart gold nanoparticle-stabilized ultrasound microbubbles as cancer theranostics. Journal of Materials Chemistry B, 6(20), 3235-3239. https://doi.org/10.1039/C8TB00368H

5. Lin, H., Chen, Y., & Shi, J. (2018). Nanoparticle-triggered in situ catalytic chemical reactions for tumour-specific therapy. Chemical Society Reviews, 47(6), 1938-1958. https://doi.org/10.1039/C7CS00471K

6. Hou, X., Zaks, T., Langer, R., & Dong, Y. (2021). Lipid nanoparticles for mRNA delivery. Nature Reviews Materials, 6(12), 1078-1094. https://doi.org/10.1038/s41578-021-00358-0

7. Mitchell, M. J., Billingsley, M. M., Haley, R. M., Wechsler, M. E., Peppas, N. A., & Langer, R. (2021). Engineering precision nanoparticles for drug delivery. Nature Reviews Drug Discovery, 20(2), 101-124. https://doi.org/10.1038/s41573-020-0090-8

8. Said, M., Gharib, M., Zrig, S., & Lévy, R. (2024). Replication of “Carbon-Dot-Based Dual-Emission Nanohybrid Produces a Ratiometric Fluorescent Sensor for In Vivo Imaging of Cellular Copper Ions”. In principle acceptance of Version 3 by Peer Community in Registered Reports. https://osf.io/qbxpf

9. Behzadi, S., Serpooshan, V., Tao, W., Hamaly, M. A., Alkawareek, M. Y., Dreaden, E. C., ... & Mahmoudi, M. (2017). Cellular uptake of nanoparticles: Journey inside the cell. Chemical Society Reviews, 46(14), 4218-4244. https://doi.org/10.1039/C6CS00636A

10. de Almeida, M. S., Susnik, E., Drasler, B., Taladriz-Blanco, P., Petri-Fink, A., & Rothen-Rutishauser, B. (2021). Understanding nanoparticle endocytosis to improve targeting strategies in nanomedicine. Chemical Society Reviews, 50(9), 5397-5434. https://doi.org/10.1039/D0CS01127D

11. Smith, S. A., Selby, L. I., Johnston, A. P., & Such, G. K. (2018). The endosomal escape of nanoparticles: toward more efficient cellular delivery. Bioconjugate Chemistry, 30(2), 263-272. https://doi.org/10.1021/acs.bioconjchem.8b00732

12. Cupic, K. I., Rennick, J. J., Johnston, A. P., & Such, G. K. (2019). Controlling endosomal escape using nanoparticle composition: current progress and future perspectives. Nanomedicine, 14(2), 215-223. https://doi.org/10.2217/nnm-2018-0326

13. Wang, Y., & Huang, L. (2013). A window onto siRNA delivery. Nature Biotechnology, 31(7), 611-612. https://doi.org/10.1038/nbt.2634

14. Zhu, A., Qu, Q., Shao, X., Kong, B., & Tian, Y. (2012). Carbon-dot-based dual-emission nanohybrid produces a ratiometric fluorescent sensor for in vivo imaging of cellular copper ions. Angewandte Chemie (International ed. in English), 51(29), 7185-7189. https://doi.org/10.1002/anie.201109089

| Replication of “Carbon-Dot-Based Dual-Emission Nanohybrid Produces a Ratiometric Fluorescent Sensor for In Vivo Imaging of Cellular Copper Ions” | Maha Said, Mustafa Gharib, Samia Zrig, Raphaël Lévy | <p>In hundreds of articles published over the past two decades, nanoparticles have been described as probes for sensing and imaging of a variety of intracellular cytosolic targets. However, nanoparticles generally enter cells by endocytosis with o... |  | Life Sciences, Medical Sciences, Physical Sciences | Emily Linnane | 2023-11-29 19:14:03 | View | |

22 Jul 2024

STAGE 1

Probing the dual-task structure of a metacontrast-masked priming paradigm with subjective visibility judgments

Charlott Wendt, Guido Hesselmann

https://osf.io/9gakq?view_only=a5e90e4db4b545e9956b8359595c013b

Do trial-wise visibility reports - and how these reports are made - alter unconscious priming effects?

Recommended by D. Samuel Schwarzkopf based on reviews by Markus Kiefer, Thomas Schmidt and 3 anonymous reviewers

Many studies of unconscious processing measure priming effects. Such experiments test whether a prime stimulus can exert an effect on speeded responses to a subsequently presented target stimulus even when participants are unaware of the prime. In some studies, participants are required to report their awareness of the prime in each trial - a dual-task design. Other studies conduct such visibility tests in separate experiments, so that the priming effect is measured via a single task. Both these approaches have pros and cons; however, it remains unclear to what extent they can affect the process of interest. Can the choice of experimental design and its parameters interfere with the priming effect? This could have implications for interpreting such effects, including in previous literature.

In the current study, Wendt and Hesselmann (2024) will investigate the effects of using a dual-task design in a masked priming paradigm, focusing on subjective visibility judgments. Based on power analysis, the study will test 34 participants performing both single-task and several dual-task conditions to measure reaction times and priming effects. Priming is tested via a speeded forced-choice identification of a target. The key manipulation is the non-speeded visibility rating of the prime using a Perceptual Awareness Scale, either with a graded (complex) rating or a dichotomous response. Moreover, participants will either provide their awareness judgement via a keyboard or vocally. Finally, participants will also complete a control condition to test prime visibility by testing the objective identification of the prime. These conditions will be presented in separate blocks, with the order randomised across participants. The authors hypothesise that using a dual-task slows down response times and boosts priming effects. However, they further posit that keyboard responses and graded visibility ratings, respectively, in the dual task reduce priming effects (but also slow response times) compared to vocal responses and dichotomous visibility judgements. In addition to the preregistered hypotheses, the study will also collect EEG data to explore the neural underpinnings of these processes.

The Stage 1 manuscript went through three rounds of review by the recommender and five expert reviewers. While the recommender would have preferred to see targeted, directional hypotheses explicitly specified in the design instead of non-directional main effects/interactions, he nevertheless considers this experimental design ready for commencing data collection, and therefore granted in-principle acceptance.

URL to the preregistered Stage 1 protocol: https://osf.io/ds2w5

Level of bias control achieved: Level 6. No part of the data or evidence that will be used to answer the research question yet exists and no part will be generated until after IPA.

List of eligible PCI RR-friendly journals:

References

Wendt, C. & Hesselmann, G. (2024). Probing the dual-task structure of a metacontrast-masked priming paradigm with subjective visibility judgments. In principle acceptance of Version 4 by Peer Community in Registered Reports. https://osf.io/ds2w5

| Probing the dual-task structure of a metacontrast-masked priming paradigm with subjective visibility judgments | Charlott Wendt, Guido Hesselmann | <p>Experiments contrasting conscious and masked stimulus processing have shaped, and continue to shape, cognitive and neurobiological theories of consciousness. However, as shown by Aru et al. (2012) the contrastive approach builds on the untenabl... | Social sciences | D. Samuel Schwarzkopf | 2024-03-02 18:20:03 | View | ||

22 Jul 2024

STAGE 1

From Thought to Senses: Assessing the Presence of a Relationship Between the Generation Effect and Multisensory Facilitation

Michaela Ritchie, Jonathan Wilbiks

https://osf.io/preprints/psyarxiv/mk75j

Exploring multi-sensory benefits in the generation effect of memory

Recommended by Gidon Frischkorn based on reviews by Vanessa Loaiza, Sharon Bertsch and Vanessa Loaiza

This study by Ritchie and Wilbiks (2024) investigates whether the generation effect, a memory advantage for self-generated verbal information, is enhanced under multisensory conditions. It is designed to explore a gap in the literature regarding the interplay between the generation effect and multisensory facilitation, with potential applications in educational settings.

Exploring multisensory aspects of the generation effect, the study has the potential to provide new insights into cognitive processing and memory enhancement. It is well rooted in established theories of the generation effect and multisensory facilitation, deducing a reasonable hypothesis that multisensory processing amplifies the generation effect. By implementing a 2 (Task Type: generate vs. read) x 3 (Sensory Modality: auditory, visual, multisensory) factorial design, it ensures a comprehensive evaluation of multisensory benefits in the generation effect. The findings could inform multisensory learning strategies in educational contexts, enhancing teaching methods and learning outcomes.

Based on these considerations and two rounds of in-depth review, the recommender awarded in-principle acceptance of the study proposal. Its strong theoretical foundation and well-conceived methodology make it a valuable contribution to the fields of cognitive and educational psychology.

URL to the preregistered Stage 1 protocol: https://osf.io/3u7eh

Level of bias control achieved: Level 6. No part of the data or evidence that will be used to answer the research question yet exists and no part will be generated until after IPA.

List of eligible PCI RR-friendly journals:

References

Ritchie, M. & Wilbiks, J. (2024). From Thought to Senses: Assessing the Relationship Between the Generation Effect and Multisensory Facilitation. In principle acceptance of Version 4 by Peer Community in Registered Reports. https://osf.io/3u7eh

| From Thought to Senses: Assessing the Presence of a Relationship Between the Generation Effect and Multisensory Facilitation | Michaela Ritchie, Jonathan Wilbiks | <p>The proposed study will investigate the relationship between the generation effect, a memory advantage for self-generated verbal information, and the multisensory facilitation effect, a phenomenon wherein congruent sensory inputs enhance cognit... | Social sciences | Gidon Frischkorn | 2024-02-01 18:34:15 | View | ||

12 Jul 2024

STAGE 1

Associations between anxiety-related traits and fear acquisition and extinction - an item-based content and meta-analysis

Maria Bruntsch, Samuel E Cooper, Rany Abend, Marian Boor, Anastasia Chalkia, Mana Ehlers, Artur Czeszumski, Dave Johnson, Maren Klingelhöfer-Jens, Jayne Morriss, Erik Mueller, Ondrej Zika, Tina Lonsdorf

https://osf.io/preprints/psyarxiv/unx7w

Integrative meta-analysis of anxiety-related traits and fear processing: bridging research to clinical application

Recommended by Sara Garofalo based on reviews by Yoann Stussi, Luigi Degni, Marco Badioli and 1 anonymous reviewer

The paper aims to bridge gaps in understanding the relationship between anxiety-related traits and fear processing, with a specific focus on fear acquisition and extinction. Fear and safety processing are known to be linked to anxiety symptoms and traits such as neuroticism and intolerance of uncertainty (Lonsdorf & Merz, 2017; Morriss et al., 2021). However, the diversity in study focus and measurement methods makes it difficult to integrate findings into clinical practice effectively.

To address this issue, Bruntsch et al. (2024) propose a systematic literature search and meta-analysis, following PRISMA guidelines, to explore these associations. They plan to use nested random effects models to analyze both psychophysiological and self-report outcome measures. Additionally, they will examine the role of different questionnaires used to assess anxiety-related traits and conduct a content analysis of these tools to evaluate trait overlaps.

Current knowledge from the literature indicates that individuals with anxiety disorders exhibit differences in fear acquisition and extinction compared to those without such disorders (Lonsdorf & Merz, 2017; Morriss et al., 2021). Previous meta-analyses have shown associations between anxiety traits and fear generalization/extinction, but these studies are limited in their scope and focus.

The primary aim of the research is to provide a comprehensive summary of the associations between anxiety-related traits and conditioned responding during fear acquisition and extinction across multiple measures. Another goal is to investigate whether different anxiety-related trait questionnaires yield different associations with fear and extinction learning. The authors will also conduct a content analysis to better interpret the results of their meta-analysis by examining the overlap in questionnaire content.

A secondary aim of the study is to evaluate how sample characteristics, experimental specifics, and study quality influence the associations between anxiety-related traits and fear acquisition and extinction. By addressing these aims, the study seeks to advance the understanding of fear-related processes in anxiety and inform more targeted prevention and intervention strategies.

The Stage 1 manuscript underwent two rounds of thorough review. After considering the detailed responses to the reviewers' comments, the recommender determined that the manuscript met the Stage 1 criteria and granted in-principle acceptance (IPA).

URL to the preregistered Stage 1 protocol: https://osf.io/4mndj

Level of bias control achieved: Level 3. At least some data/evidence that will be used to answer the research question has been previously accessed by the authors (e.g. downloaded or otherwise received), but the authors certify that they have not yet observed ANY part of the data/evidence.

List of eligible PCI RR-friendly journals:

References

1. Bruntsch, M., Abend, R., Chalkia, A., Cooper, S. E., Ehlers, M. R., Johnson, D. C., Klingelhöfer-Jens, M., Morriss, J., Zika, O., & Lonsdorf, T. B. (2024). Associations between anxiety-related traits and fear acquisition and extinction - an item-based content and meta-analysis. In principle acceptance of Version 3 by Peer Community in Registered Reports. https://osf.io/4mndj

2. Lonsdorf, T. B., & Merz, C. J. (2017). More than just noise: Inter-individual differences in fear acquisition, extinction and return of fear in humans - Biological, experiential, temperamental factors, and methodological pitfalls. Neuroscience & Biobehavioral Reviews, 80, 703–728. https://doi.org/10.1016/j.neubiorev.2017.07.007

3. Morriss, J., Wake, S., Elizabeth, C., & van Reekum, C. M. (2021). I Doubt It Is Safe: A Meta-analysis of Self-reported Intolerance of Uncertainty and Threat Extinction Training. Biological Psychiatry Global Open Science, 1, 171–179. https://doi.org/10.1016/j.bpsgos.2021.05.011

| Associations between anxiety-related traits and fear acquisition and extinction - an item-based content and meta-analysis | Maria Bruntsch, Samuel E Cooper, Rany Abend, Marian Boor, Anastasia Chalkia, Mana Ehlers, Artur Czeszumski, Dave Johnson, Maren Klingelhöfer-Jens, Jayne Morriss, Erik Mueller, Ondrej Zika, Tina Lonsdorf | <p>Background: Deficits in learning and updating of fear and safety associations have been reported in patients suffering from anxiety- and stress-related disorders. Also in healthy individuals, anxiety-related traits have been linked to altered f... | Life Sciences | Sara Garofalo | Anonymous, Luigi Degni, Marco Badioli, Yoann Stussi | 2024-03-15 14:48:20 | View | |

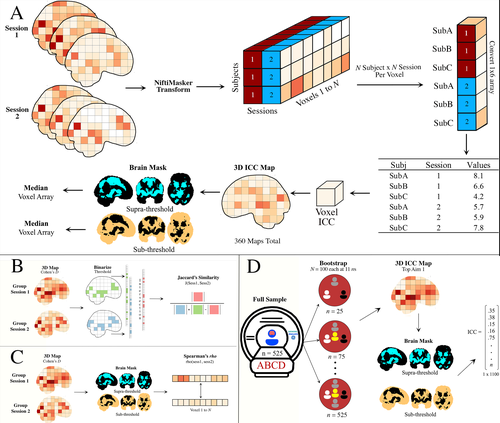

Impact of analytic decisions on test-retest reliability of individual and group estimates in functional magnetic resonance imaging: a multiverse analysis using the monetary incentive delay task

Michael I. Demidenko, Jeanette A. Mumford, Russell A. Poldrack

https://www.biorxiv.org/content/10.1101/2024.03.19.585755v5

Exploring determinants of test-retest reliability in fMRI: a study with the Monetary Incentive Delay Task

Recommended by Dorothy Bishop based on reviews by Xiangzhen Kong and 1 anonymous reviewer

Functional magnetic resonance imaging has been used to explore brain-behaviour relationships for many years, with proliferation of a wide range of sophisticated analytic procedures. However, rather scant attention has been paid to the reliability of findings. Concerns have been growing following failures to replicate findings in some fields, but it is hard to know how far this is a consequence of underpowered studies, flexible analytic pipelines, or variability within and between participants.

Demidenko et al. (2024) took advantage of the availability of three existing datasets, including the Adolescent Brain Cognitive Development (ABCD) study, the Michigan Longitudinal Study, and the Adolescent Risk Behavior Study, which all included a version of the Monetary Incentive Delay task measured in two sessions. These were entered into a multiverse analysis, which considered how within-subject and between-subject variance varies according to four analytic factors: smoothing (5 levels), motion correction (6 levels), task modelling (3 levels) and task contrasts (4 levels). They also considered how sample size affects estimates of reliability.

The results have important implications for those using fMRI with the Monetary Incentive Delay Task, and also raise questions more broadly about use of fMRI indices to study individual differences. Motion correction had relatively little impact on the ICC, and the effect size of the smoothing kernel was modest. Larger impacts on reliability were associated with choice of contrast (implicit baseline giving larger effects) and task parameterization. But perhaps the most sobering message from this analysis is that although activation maps from group data were reasonably reliable, the ICC, used as an index of reliability for individual levels of activation, was consistently low. This raises questions about the suitability of the Monetary Incentive Delay Task for studying individual differences.

Another point is that reliability estimates become more stable as sample size increases; researchers may want to consider whether the trade-off between cost and gain in precision is justified for sample sizes above 250. I did a quick literature search on Web of Science: at the time of writing the search term ("Monetary Delay Task" AND fMRI) yielded 410 returns, indicating that this is a popular method in cognitive neuroscience. The detailed analyses reported here will repay study for those who are planning further research using this task.

The Stage 2 manuscript was evaluated over one round of in-depth review. Based on detailed responses to the reviewers' and recommender's comments, the recommender judged that the manuscript met the Stage 2 criteria and awarded a positive recommendation.

URL to the preregistered Stage 1 protocol: https://osf.io/nqgeh

Level of bias control achieved: Level 2. At least some data/evidence that was used to answer the research question had been accessed and partially observed by the authors prior to IPA, but the authors certify that they had not yet sufficiently observed the key variables within the data to be able to answer the research questions and they took additional steps to maximise bias control and rigour.

List of eligible PCI RR-friendly journals:

References

1. Demidenko, M. I., Mumford, J. A., & Poldrack, R. A. (2024). Impact of analytic decisions on test-retest reliability of individual and group estimates in functional magnetic resonance imaging: a multiverse analysis using the monetary incentive delay task [Stage 2]. Acceptance of Version 5 by Peer Community in Registered Reports. https://www.biorxiv.org/content/10.1101/2024.03.19.585755v4

| Impact of analytic decisions on test-retest reliability of individual and group estimates in functional magnetic resonance imaging: a multiverse analysis using the monetary incentive delay task | Michael I. Demidenko, Jeanette A. Mumford, Russell A. Poldrack | <p>Empirical studies reporting low test-retest reliability of individual blood oxygen-level dependent (BOLD) signal estimates in functional magnetic resonance imaging (fMRI) data have resurrected interest among cognitive neuroscientists in methods... |  | Life Sciences, Social sciences | Dorothy Bishop | 2024-03-21 02:23:30 | View | |

09 Jul 2024

STAGE 1

Test-Retest Reliability in Functional Magnetic Resonance Imaging: Impact of Analytical Decisions on Individual and Group Estimates in the Monetary Incentive Delay Task

Michael I. Demidenko, Jeanette A. Mumford, Russell A. Poldrack

https://osf.io/rv859?view_only=deaf7a8d461641749f143504b2a3150b

Exploring determinants of test-retest reliability in fMRI

Recommended by Dorothy Bishop based on reviews by Xiangzhen Kong and 2 anonymous reviewers

Functional magnetic resonance imaging (fMRI) has been used to explore brain-behaviour relationships for many years, with proliferation of a wide range of sophisticated analytic procedures. However, rather scant attention has been paid to the reliability of findings. Concerns have been growing following failures to replicate findings in some fields, but it is hard to know how far this is a consequence of underpowered studies, flexible analytic pipelines, or variability within and between participants.

Demidenko et al. (2023) plan a study that will be a major step forward in addressing these issues. They take advantage of the availability of three existing datasets, the Adolescent Brain Cognitive Development (ABCD) study, the Michigan Longitudinal Study, and the Adolescent Risk Behavior Study, which all included a version of the Monetary Incentive Delay task measured in two sessions. This gives ample data for a multiverse analysis, which will consider how within-subject and between-subject variance varies according to four analytic factors: smoothing (5 levels), motion correction (6 levels), task modelling (3 levels) and task contrasts (4 levels). They will also consider how sample size affects estimates of reliability. This will involve a substantial amount of analysis.
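To give a sense of the scale implied by those four factors, the following is a minimal sketch that enumerates every combination of levels. Only the factor names and level counts come from the text above; the integer level labels and the function name `enumerate_pipelines` are placeholders for illustration and are not the authors' actual settings or code.

```python
from itertools import product

# Level counts are taken from the recommendation text.
# The integer labels (1..n) are placeholders, not the authors' real settings.
FACTOR_LEVELS = {
    "smoothing": 5,
    "motion_correction": 6,
    "task_modelling": 3,
    "task_contrast": 4,
}


def enumerate_pipelines(factor_levels):
    """Return one dict per analytic pipeline (full crossing of all factors)."""
    names = list(factor_levels)
    level_ranges = [range(1, n + 1) for n in factor_levels.values()]
    return [dict(zip(names, combo)) for combo in product(*level_ranges)]


pipelines = enumerate_pipelines(FACTOR_LEVELS)
print(len(pipelines))  # 5 * 6 * 3 * 4 = 360
```

Under the assumption that the factors are fully crossed, this yields 360 pipelines per dataset, which is consistent with the remark that the analysis is substantial.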

The study is essentially focused on estimation, although specific predictions are presented regarding the combinations of factors expected to give optimal reliability. The outcome will be a multiverse of results which will allow us to see how different pipeline decisions for this task affect reliability. In many ways, null results – finding that at least some factors have little effect on reliability – would be a positive finding for the field, as it would mean that we could be more relaxed when selecting an analytic pathway. A more likely outcome, however, is that analytic decisions will affect reliability, and this information can then guide future studies and help develop best practice guidelines. As one reviewer noted, we can’t assume that results from this analysis will generalise to other tasks, but this analysis with a widely-used task is an important step towards better and more consistent methods in fMRI.

The researchers present a fully worked out plan of action, with supporting scripts that have been developed in pilot testing. The Stage 1 manuscript received in-depth evaluation from three expert reviewers and the recommender. Based on detailed responses to the reviewers' comments, the recommender judged that the manuscript met the Stage 1 criteria and therefore awarded in-principle acceptance (IPA).

URL to the preregistered Stage 1 protocol: https://osf.io/nqgeh

Level of bias control achieved: Level 2. At least some data/evidence that will be used to answer the research question has been accessed and partially observed by the authors, but the authors certify that they have not yet sufficiently observed the key variables within the data to be able to answer the research questions AND they have taken additional steps to maximise bias control and rigour.

List of eligible PCI RR-friendly journals:

References

1. Demidenko, M. I., Mumford, J. A., & Poldrack, R. A. (2023). Test-Retest Reliability in Functional Magnetic Resonance Imaging: Impact of Analytical Decisions on Individual and Group Estimates in the Monetary Incentive Delay Task. In principle acceptance of Version 3 by Peer Community in Registered Reports. https://osf.io/nqgeh

| Test-Retest Reliability in Functional Magnetic Resonance Imaging: Impact of Analytical Decisions on Individual and Group Estimates in the Monetary Incentive Delay Task | Michael I. Demidenko, Jeanette A. Mumford, Russell A. Poldrack | <p>Empirical studies reporting low test-retest reliability of individual neural estimates in functional magnetic resonance imaging (fMRI) data have resurrected interest among cognitive neuroscientists in methods that may improve reliability in fMR... | Life Sciences, Social sciences | Dorothy Bishop | 2023-04-17 22:27:54 | View | ||

01 Jul 2024

STAGE 1

The Influence of Bilingualism on Statistical Word Learning: A Registered Report

Simonetti, M. E., Lorenz, M. G., Koch, I., & Roembke, T.

https://osf.io/2ch9y

Comparing statistical word learning in bilinguals and monolinguals

Recommended by Elizabeth Wonnacott based on reviews by 2 anonymous reviewers

Many studies have investigated the extent to which word learning is underpinned by statistical learning, i.e. tracking probabilistic relationships between forms and referents. Previous literature has investigated whether these processes differ in bilingual learners, who have to track two such sets of mappings in their linguistic environment. However, the evidence is mixed: some studies report a learning advantage for bilinguals, while others find no evidence of differences.

The current study by Simonetti et al. (2024) aims to explore this further in an experiment using the cross-situational word learning paradigm. In this paradigm, participants hear words and view arrays of objects across a series of trials. Taking each trial in isolation, the word is ambiguous, but there are consistent co-occurrences of words with referents across the trials. Two groups of participants will be compared: monolingual English speakers and English-German bilinguals. Using this paradigm, the study can track learning over time and analyse performance trial by trial. The researchers predict that bilingual learners will have a specific advantage in learning 1:2 mappings, where one word maps to two objects. The study will use Bayes factors as the method of inference, allowing the authors to differentiate evidence for "no difference" from ambiguous evidence from which no conclusion can be drawn.

The Stage 1 manuscript was evaluated over four rounds of review. Based on detailed responses to the reviewers' and recommender's comments, the recommender judged that the manuscript met the Stage 1 criteria and awarded in-principle acceptance (IPA).

URL to the preregistered Stage 1 protocol: https://osf.io/8n5gh

Level of bias control achieved: Level 6. No part of the data or evidence that will be used to answer the research question yet exists and no part will be generated until after IPA.

List of eligible PCI RR-friendly journals:

References

1. Simonetti, M. E., Lorenz, M. G., Koch, I., & Roembke, T. C. (2024). The Influence of Bilingualism on Statistical Word Learning: A Registered Report. In principle acceptance of Version 5 by Peer Community in Registered Reports. https://osf.io/8n5gh

| The Influence of Bilingualism on Statistical Word Learning: A Registered Report | Simonetti, M. E., Lorenz, M. G., Koch, I., & Roembke, T. | While statistical word learning has been the focus of many studies on monolinguals, it has received little attention in bilinguals. The results of existing studies on statistical word learning in bilinguals are inconsistent, with some res... | Social sciences | Elizabeth Wonnacott | 2023-06-28 15:37:58 | View | ||

27 Jun 2024

STAGE 1

Learning from comics versus non-comics material in education: Systematic review and meta-analysis

Marianna Pagkratidou, Neil Cohn, Phivos Phylactou, Marietta Papadatou-Pastou, Gavin Duffy

https://osf.io/preprints/metaarxiv/ceda3/

Comics in Education

Recommended by Veli-Matti Karhulahti based on reviews by Adrien Fillon, Benjamin Brummernhenrich, Solip Park and Pavol Kačmár

Especially after the impactful experiments in modern comics (e.g. McCloud 1993), research interest in the medium increased with new practical developments (Kukkonen 2013). Some of these developments now manifest in educational settings where comics are used for various pedagogical purposes in diverse cultural contexts. To what degree comics are able to reach educational outcomes in comparison to other pedagogical tools remains largely unknown, however.

In the present registered report, Pagkratidou and colleagues (2024) respond to the research gap by investigating the effectiveness of educational comics materials. By means of systematic review and meta-analysis, the authors assess all empirical studies on educational comics to map out what their claimed benefits are, how the reported effectiveness differs between STEM and non-STEM groups, and what moderating effects complicate the phenomenon. With the help of large language models, all publication languages are included in the analysis.

The research plan was reviewed over three rounds by four reviewers with diverse sets of expertise ranging from education and meta-analytic methodology to comics culture and design. After comprehensive revisions by the authors, the recommender considered the plan to meet high Stage 1 criteria and provided in-principle acceptance.

URL to the preregistered Stage 1 protocol: https://osf.io/vdr8c

Level of bias control achieved: Level 3. At least some data/evidence that will be used to answer the research question has been previously accessed by the authors (e.g. downloaded or otherwise received), but the authors certify that they have not yet observed ANY part of the data/evidence.

List of eligible PCI RR-friendly journals:

References

1. Kukkonen, K. (2013). Studying comics and graphic novels. John Wiley & Sons.

2. McCloud, S. (1993). Understanding comics: The invisible art. Tundra.

3. Pagkratidou, M., Cohn, N., Phylactou, P., Papadatou-Pastou, M., & Duffy, G. (2024). Learning from comics versus non-comics material in education: Systematic review and meta-analysis. In principle acceptance of Version 4 by Peer Community in Registered Reports. https://osf.io/vdr8c

| Learning from comics versus non-comics material in education: Systematic review and meta-analysis | Marianna Pagkratidou, Neil Cohn, Phivos Phylactou, Marietta Papadatou-Pastou, Gavin Duffy | The past decades have seen a growing use of comics (i.e., sequential presentation of images and/or text) educational material. However, there are inconsistent reports regarding their effectiveness. In this study, we aim to systematically review... | Social sciences | Veli-Matti Karhulahti | 2023-10-16 22:55:09 | View | ||

26 Jun 2024

STAGE 1

Do Scarcity-Related Cues Affect the Sustained Attentional Performance of the Poor and the Rich Differently?

Peter Szecsi, Miklos Bognar, Barnabas Szaszi

https://osf.io/5sdbp

How does economic status moderate the effect of scarcity cues on attentional performance?

Recommended by Matti Vuorre based on reviews by Ernst-Jan de Bruijn and Leon Hilbert

This Stage 1 registered report by Szecsi et al. (2024) seeks to clarify whether individuals' economic conditions moderate how scarcity cues affect their attentional performance. This idea has been explored previously; here, the authors aim to clarify how scarcity cues affect cognition by studying a large and diverse Hungarian sample with improved experimental methods.

First, while it has previously been reported that financially less well-off individuals are differentially affected by finance-related stimuli (e.g. Shah et al., 2018), Szecsi et al. (2024) argue that prior studies have used small samples with insufficient consideration of potentially important demographic variables. Therefore, the generalizability of prior studies might be lacking.

Second, Szecsi et al. (2024) aim to conduct a more realistic experiment by asking participants to free-associate in response to financial scarcity-related cues, whereas prior studies have often focused on simply querying for rating responses, which might not sufficiently engage the related cognitive mechanisms that could be most affected.

In the proposed study, then, the authors will rigorously test whether financially less well-off individuals have lower attentional performance while experiencing scarcity-related cues than individuals who are financially better off, and whether attentional performance does not differ between the groups while experiencing non-scarcity-related cues. Ultimately, Szecsi et al. propose to shed light on theories of scarcity-related cognition that posit overall decrements in attentional performance irrespective of individuals' financial status.

The Stage 1 manuscript was initially reviewed by two experts in the area, who both recommended several improvements to the study. The authors then thoroughly revised their write-up and protocol, and the two reviewers were satisfied with the substance of these revisions. Based on these evaluations, the recommender judged that the Stage 1 criteria were met and awarded in-principle acceptance. There were remaining editorial clarifications and suggestions which the authors can incorporate in their eventual Stage 2 report.

URL to the preregistered Stage 1 protocol: https://osf.io/3zdyb

Level of bias control achieved: Level 6. No part of the data or evidence that will be used to answer the research question yet exists and no part will be generated until after IPA.

List of eligible PCI RR-friendly journals:

References

1. Shah, A. K., Zhao, J., Mullainathan, S., & Shafir, E. (2018). Money in the mental lives of the poor. Social Cognition, 36, 4-19. https://doi.org/10.1521/soco.2018.36.1.4

2. Szecsi, P., Bognar, M., & Szaszi, B. (2024). Do Scarcity-Related Cues Affect the Sustained Attentional Performance of the Poor and the Rich Differently? In principle acceptance of Version 2 by Peer Community in Registered Reports. https://osf.io/3zdyb

| Do Scarcity-Related Cues Affect the Sustained Attentional Performance of the Poor and the Rich Differently? | Peter Szecsi, Miklos Bognar, Barnabas Szaszi | Cues related to financial scarcity are commonly present in the daily environment shaping people’s mental lives. However, the results are mixed on whether such scarcity-related cues disproportionately deteriorate the cognitive performance of poo... | Social sciences | Matti Vuorre | Leon Hilbert, Ernst-Jan de Bruijn | 2024-01-18 14:29:03 | View | |

25 Jun 2024

STAGE 1

Does ‘virtuality’ affect the role of prior expectations in perception and action? Comparing predictive grip and lifting forces in real and virtual environments

David J. Harris, Tom Arthur, & Gavin Buckingham

https://osf.io/q3kts

The role of prior expectations for lifting objects in virtual reality

Recommended by Robert McIntosh based on reviews by 2 anonymous reviewers

As virtual reality (VR) environments become more common, it is important to understand our sensorimotor interactions with them. In real-world settings, sensory information is supplemented by prior expectations from past experiences, aiding efficient action control. In VR, the relative role of expectations could decrease due to a lack of prior experience with the environment, or increase because sensory information is impoverished or ambiguous. Harris, Arthur and Buckingham (2024) propose to test these possibilities by comparing a real-world object lifting task and a VR version in which the same objects are lifted but visual feedback is substituted by a virtual view. The experiment uses the Size-Weight Illusion (SWI) and the Material Weight Illusion (MWI). In these paradigms, the visual appearance of the object induces expectations about weight that can affect the perception of weight during lifting, and the fingertip forces generated. The degree to which the visual appearance of objects induces differences in perceived weight, and in measured fingertip forces, will index the influence of prior expectations for these two paradigms. The analyses will test whether the influence of prior expectations is lower or higher in the VR set-up than in real-world lifting. The outcomes across tasks (SWI and MWI) and measures (perceived weight, fingertip forces) will broaden our understanding of the role of predictive sensorimotor control in novel virtual environments.

After three rounds of evaluation, with input from two external reviewers, the recommender judged that the Stage 1 manuscript met the criteria for in-principle acceptance (IPA).

URL to the preregistered Stage 1 protocol: https://osf.io/36jhb

Level of bias control achieved: Level 6. No part of the data or evidence that will be used to answer the research question yet exists and no part will be generated until after IPA.

List of eligible PCI RR-friendly journals:

References

1. Harris, D. J., Arthur, T., & Buckingham, G. (2024). Does ‘virtuality’ affect the role of prior expectations in perception and action? Comparing predictive grip and lifting forces in real and virtual environments. In principle acceptance of Version 4 by Peer Community in Registered Reports. https://osf.io/36jhb

| Does ‘virtuality’ affect the role of prior expectations in perception and action? Comparing predictive grip and lifting forces in real and virtual environments | David J. Harris, Tom Arthur, & Gavin Buckingham | Recent theories in cognitive science propose that prior expectations strongly influence how individuals perceive the world and control their actions. This influence is particularly relevant in novel sensory environments, such as virtual reality... | Life Sciences | Robert McIntosh | Ben van Buren | 2023-11-22 12:25:57 | View |

MANAGING BOARD

Chris Chambers

Zoltan Dienes

Corina Logan

Benoit Pujol

Maanasa Raghavan

Emily S Sena

Yuki Yamada